by Dot Cannon

What if technology could make decisions?

That day isn’t here–yet. But SENSORS Expo 2016 is exploring the ways it might arrive.

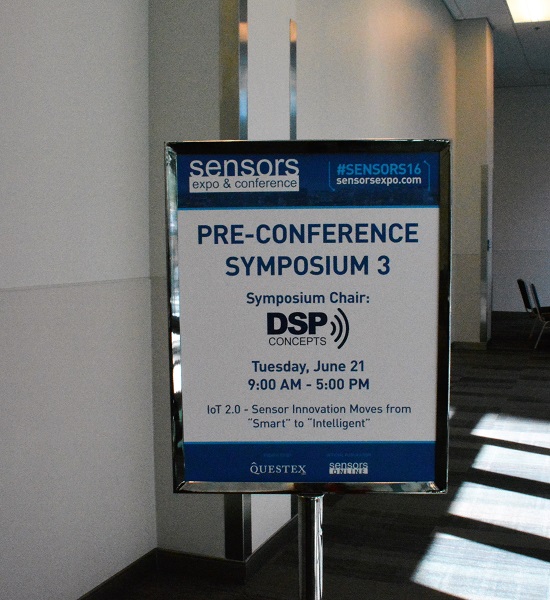

On Tuesday, DSP Concepts VP of Business Development Willard Tu hosted this pre-conference symposium at San Jose’s McEnery Convention Center.

“I think there are a lot of devices that we now call ‘smart’,” Will said, in his opening remarks. “Now we want to move to…where these devices are intelligent.”

He and the other speakers would go on to explain that “smart” devices collect information, while “intelligent” ones can use that data in an intuitive way.

“So a fitness tracker can tell me I need to get up and do a stretch right now, versus telling me how many steps I’ve taken,” Will explained. New technology, he said, could ultimately lead to that type of innovation.

“Now people talk about sensor fusion, sensor hubs, all these things that can increase (a device’s) level of intelligence.”

Some of the obstacles to boosting that intelligence, though, include massive amounts of data and limited resources.

“The size of the embedded market is very small,” Will said. “I’ve worked with 5-man teams. And (time is) always an issue. A little bit more than a year is what you’ve got, to make a new product.”

The first speaker Will introduced, Google Technical Program Manager Steve Malkos, discussed the ways Google is creating and upgrading its products towards intelligence in his presentation, “Making Android Sensors and Location Work for You.”

Detailing some of Google’s new products, Steve said, “The coolest thing I think we’re doing this year is (augmented-reality interface) 6DOF (six degrees of freedom). With (this), augmented reality experiences will become even more immersive.”

He also talked about Google’s “N API” for wearables. “Extending capability, we’ll get a much finer-grained event which will correspond to the peak of each heartbeat, tell you if you’re overtraining, (analyze your sleep) and much more.”

At the core of Steve’s presentation were the ways Google is continuing to upgrade its GPS. He offered four pillars of context: coverage, accuracy, latency and power. “At Google we are always testing GPS,” he said. “With the Android sensor hub, we use all wireless radios on a motion device to understand (a user’s state and circumstances). And we want users to have a better understanding of themselves.”

“To truly understand…a user’s context we need to answer three key questions: location, activity, nearby.”

“Do you remember how many sensors were on the first Apple phone?” asked Yole Developpement Technology and Marketing Analyst Guillaume Girardin, at the start of his presentation. “It was three. Today we have twelve, (and) you will see…that many more are coming.”

Guillaume explained that sensor manufacturers were pushing hard for sensor fusion–which could lead to some interesting new consumer innovations.

“We believe that we are starting a new era of mobile photography,” he said. “You could be able to manipulate 3D shapes with wearables, like a photo lens on your head. The 3D camera technology is now mature.”

Guillaume commented that the 3D camera was now less expensive than the new camera on a smartphone.

“With a 3D camera you can directly see through the eyes of the people you’re talking to. You can change the shape of your visage to transform into animals when you’re talking to young people, (and) you can create your own avatar in gaming.”

“I see a future that basically will allow us to do what major-league baseball is doing,” said Rhiza CEO Josh Knauer during his presentation. “(They have) sensor rays pointed at the field to show how quickly a player (reacts), their heart rate, are they sweating. My guess is, in about five years, we’re going to see that technology showing up in stores.”

Josh said that in the future, sensors would enable retailers to see where customers were looking. “Can we sense their emotions? Maybe the store wants to put certain items on sale right then. For those of you who believe the future is (buying on Amazon), I don’t believe that at all.”

Like several other speakers during the day, Josh said diverse technology was the key to making this happen.

“There’s never going to be one thing that rules them all…We need an ecosystem of different types of sensors that are brought together.”

Next, IntelliVision CEO Valdhi Nathan explored the ways video cameras are becoming more intelligent and connected. He outlined four different areas: smart cities, smart retail, smart home and smart machines.

Discussing smart retail, Valdhi said, “As you walk in the store, there are almost sixteen cameras tracking where you are, which aisles you walked, what you carried in the basket. This is coming in the next year.”

With the smart home, he said, home monitoring would be possible anywhere in the world. The cameras would detail what time a child arrived home, whether a package was delivered and when someone mowed the back yard.

“There’s going to be a garage camera very soon,” he said, “‘You’re coming home, I’m going to open the garage door.’”

But all this innovation doesn’t come without reservations. As the morning concluded with a discussion panel, Josh Knauer commented, “We should obviously (think of security). Now if I’m a carjacker, I can get in your house.”

“What is the next new sensor?” Will asked the panel.

Edoardo Gallizio, from STMicroelectronics, responded, “Programmable sensors that can reprogram themselves–commercial and environmental.”

“The biggest trend I see,” said Valdhi, “is biomedical body sensors.”

Panelist Marcellino Gemelli, of Bosch Sensortec, pointed out the ways sensors could lead to intelligent technology. “We have these sensor hubs. Moving forward, we see that these sensors can learn over time and adapt to what the user is doing. And this is true for motion, sound (and) video.”

“Right now, the mass market is pretty satisfied with mic quality (in consumer electronics),” said Mike Adell of Knowles. “Go to a Justin Bieber concert, there are 100,000 phones taking video, not one has good audio.”

“How far away are we from devices that attenuate?” asked an audience member.

“That’s happening now,” Mike said. “And it’s hard. You want to keep (sound) natural, 90 percent accuracy isn’t enough. (We need) 98 (percent).”

As the panel concluded, Edoardo summed up the morning’s discussions. “Just a simple word: why? Why we are developing sensors, is to improve people’s lives.”