by Dot Cannon

“Initiating autonomous drive.”

With these words, on Wednesday, Intel treated riders to a 90-second simulation of the future. And this was just the beginning of the day at CES® 2018.

Taking passengers on a simulated drive through “New York City”, the simulator stopped for pedestrians, detected other vehicles on the road, and even began to communicate with technology at its “destination”.

“(This) actually gives you an opportunity to see what the autonomous vehicle sees,” said marketing associate Meghan Merrill.

“Obviously safety’s a big concern. So we want to highlight our robust sensory network. Cameras, LIDAR, radar, ultrasonics…you get a chance to see what it’s detecting on the road. We’re also showing our crowd-source mapping. So this is a way we’re making the vehicles more affordable.”

“We would like to have this out (on) the road by 2021,” Merrill continued.

“In the future, this could communicate with a smart home…it could turn on your coffee pot, if you have a certain music you like, it could learn your preferences.”

And of course, this experience was just one of many game-changers.

CES® 2018’s official Day One had started with keynotes by CTA™ President and CEO Gary Shapiro, CES® and Corporate Business Strategy Vice President Karen Chupka and Ford Motor Company President Jim Hackett. The themes were connectivity, smart cities and interaction with residents.

“CES® is about connections, and it’s about connectivity,” Shapiro said.

Previewing the show, he mentioned a new 2018 addition. “For the first time, we have a Design & Source marketplace,” he told the audience.

During his presentation, Hackett outlined a vision for the future of smart cities. Behind him, an animated urban landscape played on the screen.

“We’re referring to that as a living street,” he said. “In the future, the car and the system will be talking to each other. The city’s transportation grid (will rotate around what the cars need).”

The future of virtual reality

Unfortunately, heavy rain and traffic delayed our arrival at LVCC Tech East. We saw only part of an excellent panel on “Understanding AR and VR Content Challenges”, moderated by Consumer Technology Association’s Meenakshi Ramasubramanian.

But we did get to hear Vuze General Manager Jim Malcolm offer an intriguing prediction.

“From my perspective, it comes down to social,” he said. “The more we start to interact and live our lives inside a VR headset, you become very comfortable. Social VR is going to drive the future of virtual reality.”

Later, on the Virtual Reality/Gaming show floor, we completely fell in love with his Vuze 3 spherical cameras.

NASA and National Geographic use these for their VR video, and a short demo took us underwater to a coral reef, to a sidewalk cafe and to a shoreline with parasailers, in short order.

New aspects of gaming

In the Gaming and VR area, in LVCC’s South Hall, a couple of other favorite innovations included:

Getting to experience VR Eye Tracking! This Tobii technology allows a user to explode incoming “asteroids” just by looking at them!

And there was a line upstairs for the demo of a more involved VR eye tracking experience. We did not want to leave this one!

We won’t ruin it by telling you what’s involved–just that it was well worth the wait. And that, should you have any concerns about dizziness, the landscape you’ll see is very stable, as well as a lot of fun.

Another intriguing find? This is the “3DRudder” foot motion controller for virtual reality! It allows a gamer–or anyone else using VR–to sit and be hands-free while experiencing virtual reality.

“By tilting the device, you move forward in the game, or the 3D application, whatever you are using,” explained 3DRudder Founder and CEO Stanislas Chesnais.

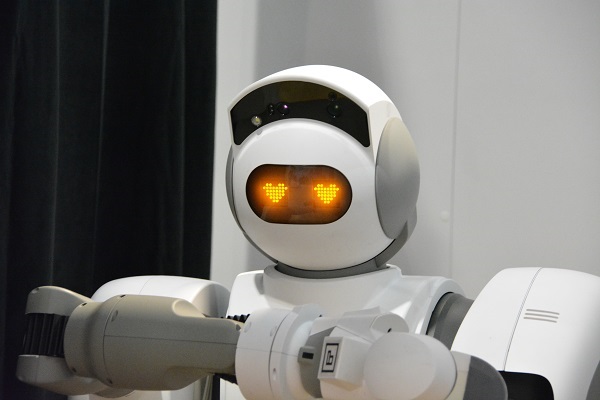

And, a cool robot

In the Robotics area of South Hall was a multifunctional in-home robot that could easily fall into the “I-want-one” category.

The Aeolus™ robot was doing a demo in which she (as demonstrator Cindy referred to her) picked up items from the floor. She also vacuumed, moved a chair–and fetched a Coke from an ice chest.

Aeolus Robotics President Alex Huang said he designed the Aeolus™ to learn her environment over time. Aeolus™ can distinguish between bringing a user a beer, a soda, or a cup of coffee.

“The robot has…very strong artificial intelligence,” he explained. “The more it works for you, the (smarter) it is.”

Aeolus™, he added, is scheduled to be launched by the end of the year.

Amazing show, CES® 2018! See you on Day 2.